From Nov. 27 to Dec. 1, cloud computing behemoth AWS held the 2023 edition of its annual summit “re:Invent” in Las Vegas, Nevada.

AWS bills the event as a learning conference, and this year’s iteration offered upskilling opportunities in spades. More than 50,000 attendees had access to over 2,200 bootcamps, builder labs, and breakout sessions where they could learn from Amazon experts and partners how best to leverage AWS products.

Naturally, re:Invent also provides a platform for AWS to spotlight fresh innovations. This year, generative artificial intelligence (gen AI) products and services took center stage, but other highlights included upgrades to S3 storage, general-purpose computing chips, and EC2 instances.

Accelerating gen AI building, training, and customization for businesses

Enterprises are past the point of discussing gen AI’s potential. Now, they are considering how to get the most value out of this technology.

AWS intends to support every organization’s gen AI journey, whether they will tackle the daunting task of building their own Foundation Models (FMs), choose among existing FMs to develop their own apps, or customize pre-made apps for their needs.

For entities building FMs or Large Language Models (LLMs) from the ground up, Amazon is supplying the infrastructure needed for this compute-intensive undertaking with its AWS Trainium2 chip, which will arrive in 2024.

Like the original Trainium chip, Trainium2 is optimized for gen AI and ML training. This chip, though, is up to four times faster than its predecessor and is designed to train FMs with hundreds of billions to trillions of parameters.

AWS also aims to support firms that prefer saving on costs and resources by customizing existing LLMs and FMs to build their own gen AI apps.

For these clients, AWS released numerous expansions for Amazon Bedrock, its managed service that allows AWS clients to choose from and personalize a variety of FMs and LLMs owned by Amazon or some of the world’s top gen AI companies.

One such expansion is access to new or upgraded versions of LLMs. These include Anthropic’s Claude 2.1, which Anthropic says produces 50 percent fewer hallucinations than the previous version; Meta’s Llama 2 70B, which is specialized for chat; and Amazon’s new Titan foundation models, Titan Text Lite and Titan Text Express, which are smaller and more cost-effective models built for text-related tasks.

Because generalized FMs deliver the most benefit when they are personalized to work with an enterprise’s data, Amazon Bedrock’s next expansion was customization capabilities.

The first of these capabilities is support for fine-tuning, the process where models learn from labelled data provided by the business to generate tailored responses.

This feature was made generally available on Cohere Command Lite, Meta Llama 2, and Amazon Titan Text models during the event. AWS added that this feature will also be coming to Anthropic Claude soon.

Another customization capability made generally available during the event is Knowledge Bases for Amazon Bedrock. It is based on Retrieval Augmented Generation (RAG), a technique that retrieves new or updated information from a client’s own data and supplies it to the model so that its outputs take that information into account.

This feature aims to streamline the development of gen AI apps that utilize proprietary data to deliver updated responses, like chatbots and question-answering systems.
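To make the RAG idea concrete, here is a minimal sketch of the retrieve-then-augment pattern in Python. It is not how Knowledge Bases for Amazon Bedrock is implemented: it swaps in simple word overlap where a production system would typically use vector embeddings, and it stops at building the prompt rather than calling a model. The function names and the sample documents are hypothetical.

```python
import re

def tokenize(text):
    """Lowercase a string and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:top_k]

def build_prompt(question, documents, top_k=1):
    """Prepend the retrieved passages to the question before it reaches a model."""
    context = "\n".join(retrieve(question, documents, top_k))
    return f"Use only this context:\n{context}\n\nQuestion: {question}"

# Hypothetical company documents standing in for a knowledge base.
DOCS = [
    "The refund window for hardware orders is 30 days.",
    "Support is available on weekdays from 9am to 5pm.",
    "Office parking passes are renewed every January.",
]

if __name__ == "__main__":
    print(build_prompt("What is the refund window for hardware?", DOCS))
```

Because the passage is fetched at question time, updating a document changes the answer without retraining the model, which is the property that makes the approach attractive for chatbots built on proprietary data.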

Another significant expansion is Guardrails for Amazon Bedrock. Meant to underscore Amazon’s commitment to the ethical use of AI, this feature currently enables clients to configure harmful-content filters based on responsible AI principles and apply them to FMs or agents. In the future, it will also allow information to be redacted from FM responses.

For enterprises that want to take full advantage of existing apps, AWS rolled out a preview of Amazon Q, the first gen AI-powered assistant made specifically for work that can be tailored to businesses. This assistant was designed to securely learn a company’s information, code, and systems to provide interactive responses specific to that company’s needs and context.

Amazon Q is envisioned to assist multiple roles. It can support developers and builders by guiding them through new, unfamiliar AWS capabilities, architecting solutions, troubleshooting, and even doing the heavy lifting when upgrading languages on AWS.

Business analysts can benefit because they can simply tell Amazon Q what to visualize and the assistant will generate dashboards, charts, and reports. It can even help employees across the company retrieve information appropriate to their position and synthesize it into comprehensible chunks.

To cap off these gen AI announcements, AWS discussed a deepening of its partnership with GPU manufacturer NVIDIA that will bring the NVIDIA DGX Cloud to AWS. Known as NVIDIA’s “AI factory,” this platform is designed to greatly speed up the training of large models.

Continuously improving storage and compute power

On top of this infrastructure and these tools to help companies easily harness gen AI, AWS unveiled several updates to other products.

Regarding storage, it launched S3 Express One Zone, a new S3 storage class meant for businesses’ most frequently accessed data. It is built on hardware and software designed to accelerate data processing and to deliver the highest-performance, lowest-latency cloud object storage. Moreover, it offers businesses 50 percent lower access costs than S3 Standard.

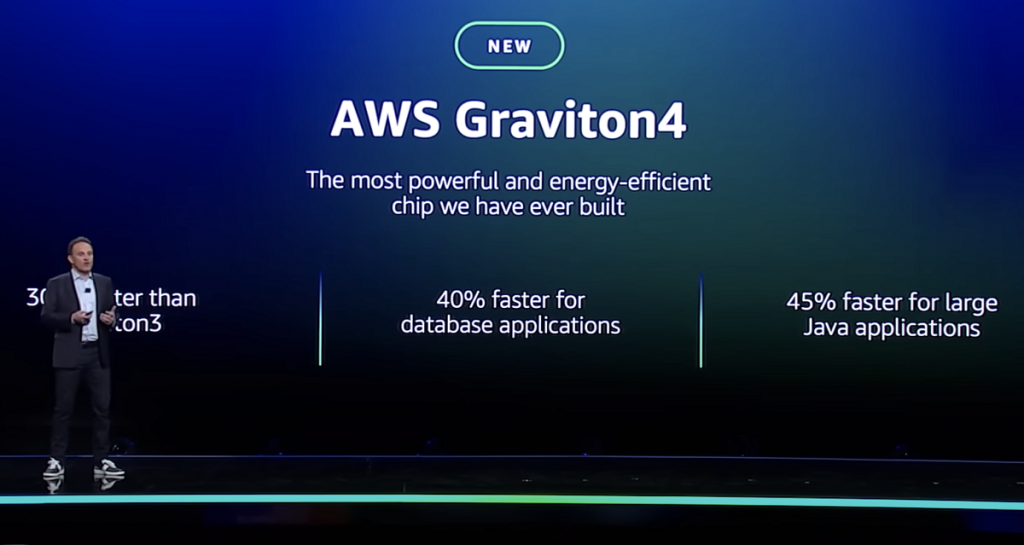

The company also launched the Graviton4 chip, the most powerful and energy-efficient general-purpose compute chip ever built by AWS. It is 30 percent faster than Graviton3 overall, 40 percent faster for database applications, and 45 percent faster for large Java applications.

Relatedly, it also released in preview the R8g instances for EC2, powered by the Graviton4 chip. The first instance type based on Graviton4, it is built to be cost-effective and efficient for memory-intensive workloads that process large data sets, such as big data analytics and databases.

“The exciting challenge ahead of all of us this next year is to look closely at our work and reimagine it, to reinvent it, to dream up experiences that were previously impossible and make them real,” AWS chief executive Adam Selipsky said during the event.

“We’re so excited to be your partner in this new world we’re building together.”